Overview

Introduction

Monstera is a framework that allows you to write stateful application logic in pure Go with all data in memory or on disk without worrying about scalability and availability. Monstera takes care of replication, sharding, snapshotting, and rebalancing.

Monstera is a half-technical and half-mental framework. The tech part of it has a pretty small surface. It leaves a lot up to you to implement. But sticking to the framework principles will ensure:

- Applications are insanely fast and efficient

- Cluster is horizontally scalable

- Business logic is easily testable

- Local development is enjoyable

Data and compute are brought together into a single process. You are free to use any in-memory data structure or embedded database. By eliminating network calls and limited query languages for communication with external databases, you are able to solve problems which are known to be hard in distributed systems with just a few lines of your favorite programming language as you would do it on a whiteboard.

This is very different from stored procedures in SQL databases, which are often considered harmful for two main reasons. First, business logic is spread between two places: the application and the database. Second, stored procedures are written in a language that is typically less capable than the general-purpose language used for the main application logic, and they lack the usual tooling for debugging, monitoring, unit testing, and logging. In Monstera, all logic is written in Go, in one place.

Components

The main components of the system are stateful application cores. They are modeled as State Machines. Application cores are fully self-contained and do not have any side effects. There are two types of operations on application cores:

- Updates: any operation that modifies internal state. An update can also return arbitrary results.

- Reads: any operation that only returns some results.

All updates are replicated before they are applied to an application core. Updates are applied sequentially in a single thread. In effect, this gives you serializable transactions out of the box, which lets you perform complex operations with simple and highly testable code.

Reads are performed in parallel with updates or other reads. Reads can be optionally performed on follower replicas when stale data is acceptable, and there is a need to increase read throughput.
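As a rough illustration of the update/read split, here is a minimal sketch of an application core written as a plain Go state machine. The `AccountsCore` type and its methods are hypothetical and are not part of Monstera's API. The `sync.RWMutex` is one assumed way to keep parallel reads safe while a single update is being applied; a real core may use a different strategy.

```go
package main

import (
	"fmt"
	"sync"
)

// AccountsCore is a hypothetical application core: an in-memory state
// machine with no side effects outside its own state.
type AccountsCore struct {
	mu       sync.RWMutex // assumption: guards reads that run alongside an update
	balances map[string]int64
}

func NewAccountsCore() *AccountsCore {
	return &AccountsCore{balances: make(map[string]int64)}
}

// Deposit is an update: it modifies state and returns the new balance.
func (c *AccountsCore) Deposit(id string, amount int64) int64 {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.balances[id] += amount
	return c.balances[id]
}

// Transfer is an update that touches two accounts. Because updates are
// applied one at a time, the check and both writes behave as one transaction.
func (c *AccountsCore) Transfer(from, to string, amount int64) bool {
	c.mu.Lock()
	defer c.mu.Unlock()
	if amount <= 0 || c.balances[from] < amount {
		return false // the same input is rejected the same way on every replica
	}
	c.balances[from] -= amount
	c.balances[to] += amount
	return true
}

// Balance is a read: it only returns a result and never changes state.
func (c *AccountsCore) Balance(id string) int64 {
	c.mu.RLock()
	defer c.mu.RUnlock()
	return c.balances[id]
}

func main() {
	core := NewAccountsCore()
	core.Deposit("alice", 100)
	fmt.Println(core.Transfer("alice", "bob", 30))          // true
	fmt.Println(core.Transfer("bob", "alice", 50))          // false
	fmt.Println(core.Balance("alice"), core.Balance("bob")) // 70 30
}
```

Since the core is an ordinary Go type, it can be unit tested directly without starting a cluster.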

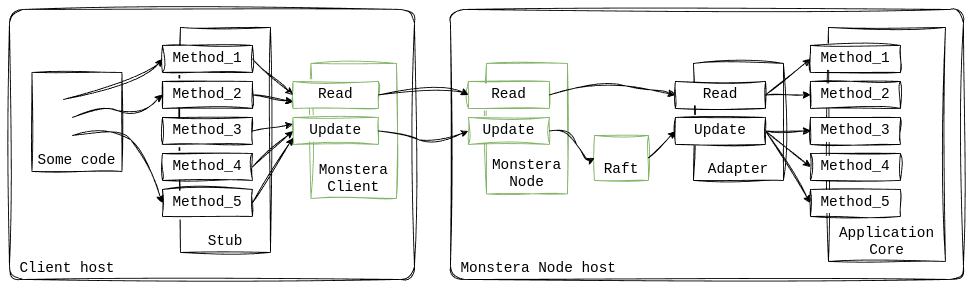

The Monstera framework itself (components colored green on the diagram) handles only binary blobs for requests and responses. It performs only abstract reads and updates and knows nothing about the logic or interface of an application core. Two components, one on each side, make it friendlier to work with: a stub and an adapter.

A stub implements the high-level interface of your application. Any code interacts with applications in a Monstera cluster only via stubs. A stub:

- Interacts with the cluster via the Monstera client.

- Keeps a mapping of methods to application cores (can be several of them).

- Knows which methods are reads and which methods are updates.

- Knows which read methods should be called on a leader only and which methods are ok to call on followers.

- Extracts shard keys from requests.

- Marshals requests and unmarshals responses.

An adapter connects binary-speaking Monstera with your Go application core. The job of an adapter is:

- To keep a mapping of requests to methods on the application core.

- To unmarshal requests and marshal responses.

Stubs, adapters, and their APIs are code-generated by Monstera tooling from a YAML config.
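To make the division of labor concrete, here is a hand-written sketch of a stub and an adapter around a toy counter core. Everything in it is an assumption made for illustration: the `BlobClient` interface stands in for the Monstera client, the JSON `request` envelope stands in for whatever serialization the generated code uses, and `inProcess` bypasses the network and replication entirely. The code that Monstera tooling generates will look different.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// CounterCore is a toy application core: named counters held in memory.
type CounterCore struct{ counts map[string]int64 }

func (c *CounterCore) Increment(name string, by int64) int64 {
	c.counts[name] += by
	return c.counts[name]
}

func (c *CounterCore) Get(name string) int64 { return c.counts[name] }

// request is a hypothetical wire format; JSON only keeps the sketch short.
type request struct {
	Method string `json:"method"`
	Name   string `json:"name"`
	By     int64  `json:"by,omitempty"`
}

// Adapter unmarshals binary requests, calls the matching core method,
// and marshals the result.
type Adapter struct{ core *CounterCore }

func (a *Adapter) Update(blob []byte) ([]byte, error) { return a.dispatch(blob, true) }
func (a *Adapter) Read(blob []byte) ([]byte, error)   { return a.dispatch(blob, false) }

func (a *Adapter) dispatch(blob []byte, isUpdate bool) ([]byte, error) {
	var r request
	if err := json.Unmarshal(blob, &r); err != nil {
		return nil, err
	}
	switch {
	case isUpdate && r.Method == "Increment":
		return json.Marshal(a.core.Increment(r.Name, r.By))
	case !isUpdate && r.Method == "Get":
		return json.Marshal(a.core.Get(r.Name))
	}
	return nil, errors.New("unknown method: " + r.Method)
}

// BlobClient stands in for the Monstera client, which only moves blobs.
type BlobClient interface {
	Update(shardKey string, blob []byte) ([]byte, error)
	Read(shardKey string, blob []byte, allowFollower bool) ([]byte, error)
}

// inProcess skips networking and replication and calls one adapter directly.
type inProcess struct{ a *Adapter }

func (p inProcess) Update(_ string, blob []byte) ([]byte, error)       { return p.a.Update(blob) }
func (p inProcess) Read(_ string, blob []byte, _ bool) ([]byte, error) { return p.a.Read(blob) }

// CounterStub exposes the typed interface. It knows which methods are
// updates or reads, extracts the shard key, and handles marshalling.
type CounterStub struct{ client BlobClient }

func (s *CounterStub) Increment(name string, by int64) (int64, error) {
	blob, err := json.Marshal(request{Method: "Increment", Name: name, By: by})
	if err != nil {
		return 0, err
	}
	resp, err := s.client.Update(name, blob) // shard key: the counter name
	return decode(resp, err)
}

func (s *CounterStub) Get(name string) (int64, error) {
	blob, err := json.Marshal(request{Method: "Get", Name: name})
	if err != nil {
		return 0, err
	}
	resp, err := s.client.Read(name, blob, true) // stale reads are acceptable here
	return decode(resp, err)
}

func decode(resp []byte, err error) (int64, error) {
	if err != nil {
		return 0, err
	}
	var v int64
	err = json.Unmarshal(resp, &v)
	return v, err
}

func main() {
	core := &CounterCore{counts: make(map[string]int64)}
	stub := &CounterStub{client: inProcess{a: &Adapter{core: core}}}
	stub.Increment("visits", 2)
	n, err := stub.Increment("visits", 3)
	fmt.Println(n, err) // 5 <nil>
}
```

Callers only ever see `CounterStub`, and the core only ever sees typed Go calls; the blobs exist solely between the two.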

Sharding and replication

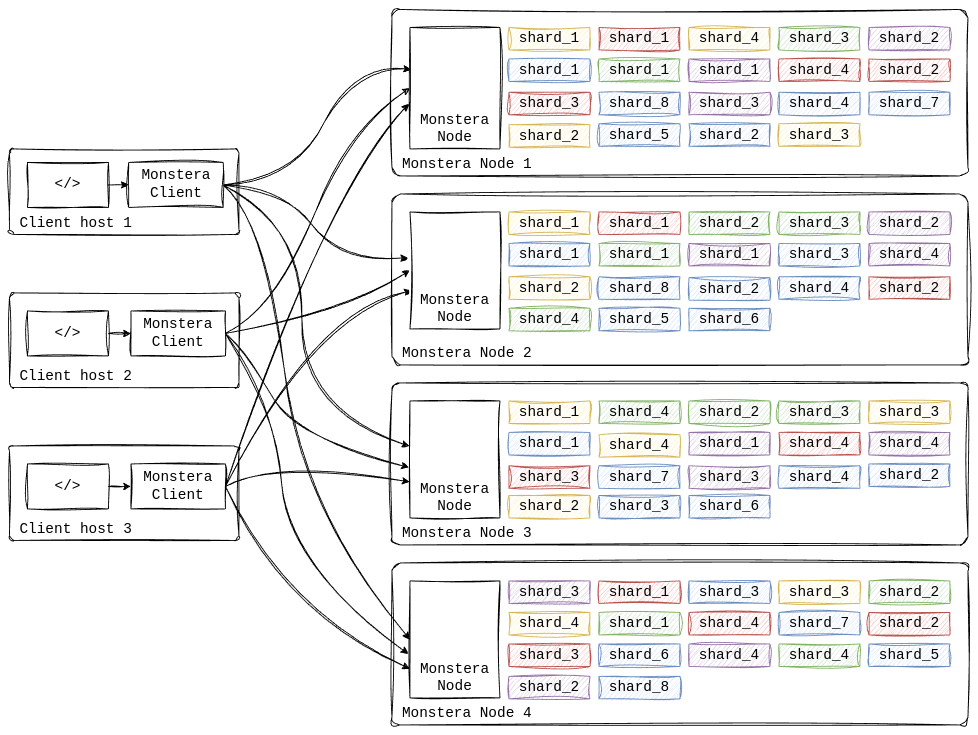

An application core implements some business domain that can be broken down into units of work (see Units of work for more info). Units have unique keys that together define the keyspace of that application core. This keyspace is divided into shards (ranges of the keyspace), each small enough to fit on a single machine. When a shard grows too large, it is split further and moved to another machine.
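The idea of range-based shards can be sketched in a few lines of Go. This is an illustration only: the `Shard` type, the `FindShard` and `Split` helpers, and the choice of byte-wise lexicographic key ordering are assumptions, not Monstera's actual data model.

```go
package main

import (
	"bytes"
	"fmt"
	"sort"
)

// Shard is a hypothetical description of one key range: [Lower, Upper).
type Shard struct {
	ID    string
	Lower []byte // inclusive
	Upper []byte // exclusive; nil means "to the end of the keyspace"
}

// FindShard returns the shard whose range contains key. The slice must be
// sorted by Lower and cover the keyspace without gaps.
func FindShard(shards []Shard, key []byte) (Shard, bool) {
	// Find the first shard that starts after key; the one before it is the candidate.
	i := sort.Search(len(shards), func(i int) bool {
		return bytes.Compare(shards[i].Lower, key) > 0
	})
	if i == 0 {
		return Shard{}, false
	}
	s := shards[i-1]
	if s.Upper != nil && bytes.Compare(key, s.Upper) >= 0 {
		return Shard{}, false
	}
	return s, true
}

// Split divides a shard at the given key. The left half keeps the old ID.
func Split(s Shard, at []byte, newID string) (Shard, Shard) {
	left := Shard{ID: s.ID, Lower: s.Lower, Upper: at}
	right := Shard{ID: newID, Lower: at, Upper: s.Upper}
	return left, right
}

func main() {
	shards := []Shard{
		{ID: "s1", Lower: []byte(""), Upper: []byte("h")},
		{ID: "s2", Lower: []byte("h"), Upper: []byte("q")},
		{ID: "s3", Lower: []byte("q"), Upper: nil},
	}
	s, _ := FindShard(shards, []byte("kiwi"))
	fmt.Println(s.ID) // s2

	// s2 has grown, so split it at "m".
	left, right := Split(shards[1], []byte("m"), "s4")
	shards = []Shard{shards[0], left, right, shards[2]}
	s, _ = FindShard(shards, []byte("mango"))
	fmt.Println(s.ID) // s4
}
```

Because every key maps to exactly one range, a split changes routing only for keys inside the shard that was split.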

Each shard is replicated with a configurable replication factor. The replicas of a shard form a Raft group. A full Monstera cluster runs hundreds of independent Raft groups at the same time. The cluster can be deployed with no downtime, even under load, and can sustain the loss of some nodes (one or more, depending on the replication factor).
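How many lost replicas a shard can survive follows from the standard Raft majority rule. The short sketch below works through the arithmetic, assuming the replication factor equals the size of the Raft group; the function names are illustrative and not part of Monstera.

```go
package main

import "fmt"

// Quorum returns how many replicas must acknowledge an update in a Raft
// group of the given size: a strict majority.
func Quorum(replicas int) int { return replicas/2 + 1 }

// TolerableFailures returns how many replicas can be lost while the group
// can still form a majority and commit updates.
func TolerableFailures(replicas int) int { return replicas - Quorum(replicas) }

func main() {
	for _, n := range []int{3, 5, 7} {
		fmt.Printf("replicas=%d quorum=%d tolerates=%d\n", n, Quorum(n), TolerableFailures(n))
	}
	// replicas=3 quorum=2 tolerates=1
	// replicas=5 quorum=3 tolerates=2
	// replicas=7 quorum=4 tolerates=3
}
```

Note that an even replication factor adds no extra tolerance: four replicas still survive only one failure, which is why odd group sizes are the usual choice.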

A Monstera cluster consists of several stateful nodes (minimum 3, no maximum limit). A client is an application that interacts with Monstera application cores. Typically, clients are stateless applications: web servers or background workers. A single cluster can host multiple application cores (colored differently on the diagram above), which ensures better resource utilization. The mapping of replicas to nodes is pushed to each client; no single service is responsible for managing the mapping. This minimizes the number of network calls and points of failure.

Examples

There are several examples of how to build applications with the Monstera framework.

- Grackle (github.com/evrblk/grackle) - a production-ready system for distributed synchronization primitives.

- Toy examples based on popular system design interview questions: github.com/evrblk/monstera-example. The examples are complete and runnable. Go to the repository and follow README.md.